description:

PerfectFit is a collaboration between The University of Tokyo and Université Paris-Saclay. It is a collaborative framework that brings clothing designers and their customers together to co-design a virtual garment.

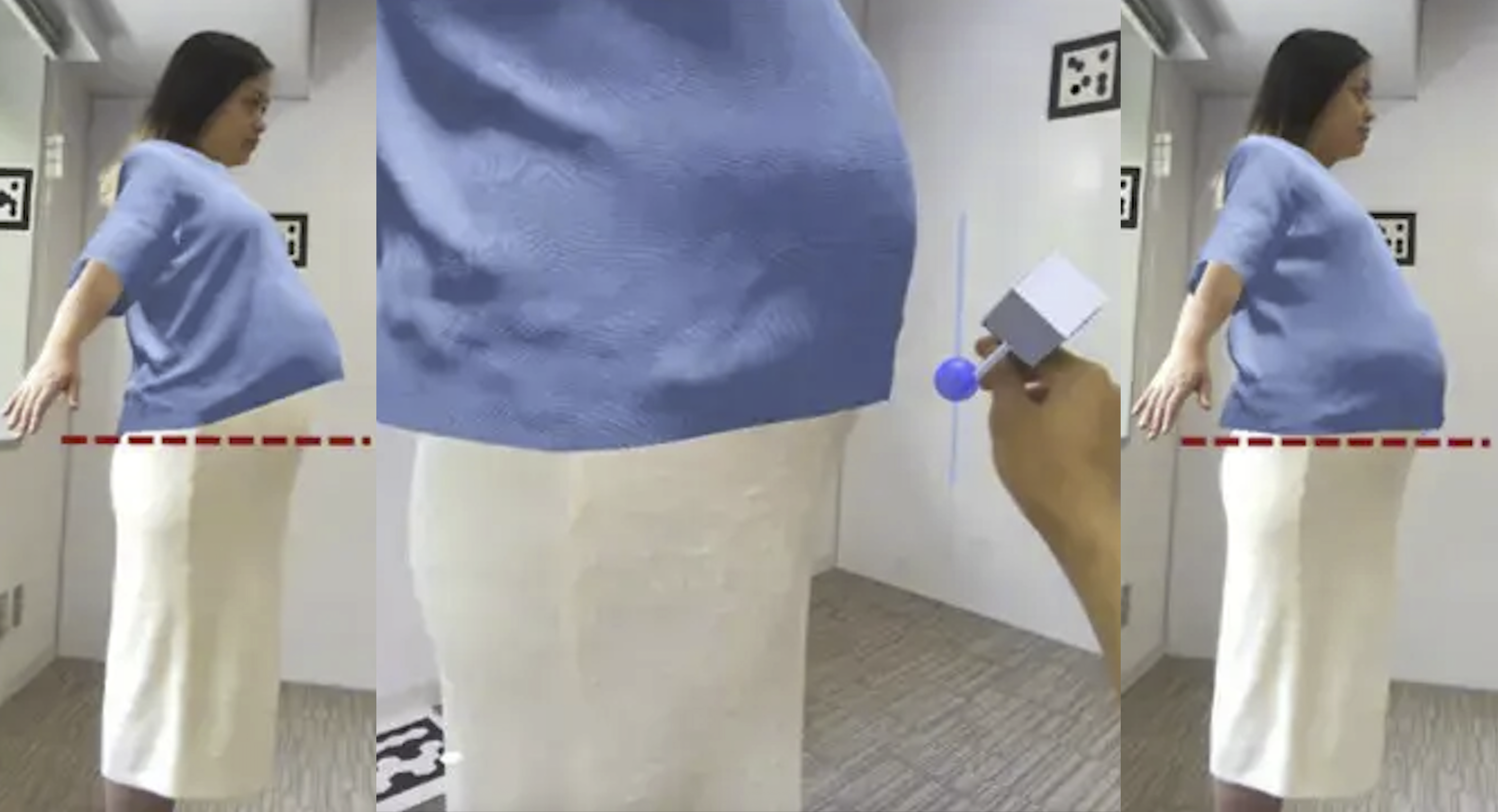

In this demo, we visualised the virtual garment through Canon MREAL X1 glasses. Designers could view and adjust virtual clothes on the client’s body in real time, editing the garment’s dimensions with a tracked physical pointing stylus or with natural hand gestures. The system used an Azure Kinect depth sensor to track the client’s body motion and reconstruct a 3D avatar as an SMPL model, which in turn affected the digital garment simulation.

This work addresses fashion waste caused by mass production. It explores whether AR can enable virtual design, tailoring, and fitting of garments, and so reduce the number of redesign loops during production.

contributions:

We used several ML-based frameworks: MediaPipe for hand tracking and NVIDIA segmentation models for real-time hand masking. We implemented position-based dynamics to simulate cloth behavior in response to the user’s motion. The Canon MREAL X1 AR glasses also demand substantial compute, and we were limited to two laptops with an RTX 4090. The project therefore required extensive performance optimization, and we had to distribute the components across the two machines to maintain real-time performance. My role focused on identifying the optimal distribution strategy for the system modules, ensuring seamless execution.
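To illustrate the idea behind position-based dynamics, here is a minimal sketch in plain Python. It is not the project's code: the function name `pbd_step`, its parameters, and the use of simple distance constraints between particles are assumptions made for illustration. A cloth solver used in practice would typically add bending and collision constraints and run on the GPU.

```python
import math


def pbd_step(positions, velocities, inv_masses, constraints,
             dt=1.0 / 60.0, gravity=(0.0, -9.81, 0.0), iterations=10):
    """Advance a particle system by one position-based dynamics step.

    positions, velocities: lists of [x, y, z] per particle.
    inv_masses: inverse mass per particle (0.0 pins the particle).
    constraints: list of (i, j, rest_length) distance constraints.
    All names here are hypothetical, chosen for this sketch.
    """
    # 1. Predict new positions from velocities and gravity.
    predicted = []
    for p, v, w in zip(positions, velocities, inv_masses):
        if w == 0.0:
            predicted.append(list(p))  # pinned particles do not move
            continue
        v_new = [v[k] + dt * gravity[k] for k in range(3)]
        predicted.append([p[k] + dt * v_new[k] for k in range(3)])

    # 2. Iteratively project each distance constraint onto the predictions.
    for _ in range(iterations):
        for i, j, rest in constraints:
            wi, wj = inv_masses[i], inv_masses[j]
            w_sum = wi + wj
            if w_sum == 0.0:
                continue  # both ends pinned
            delta = [predicted[j][k] - predicted[i][k] for k in range(3)]
            dist = math.sqrt(sum(d * d for d in delta))
            if dist < 1e-9:
                continue  # avoid division by zero for coincident particles
            # Constraint C = dist - rest; move both ends along delta,
            # weighted by inverse mass, so that C goes to zero.
            scale = (dist - rest) / (dist * w_sum)
            for k in range(3):
                predicted[i][k] += wi * scale * delta[k]
                predicted[j][k] -= wj * scale * delta[k]

    # 3. Derive velocities from the corrected position change.
    new_velocities = [
        [(predicted[n][k] - positions[n][k]) / dt for k in range(3)]
        for n in range(len(positions))
    ]
    return predicted, new_velocities


if __name__ == "__main__":
    # Two particles joined by one constraint; the first is pinned.
    pos = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
    vel = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
    for _ in range(60):
        pos, vel = pbd_step(pos, vel, [0.0, 1.0], [(0, 1, 1.0)])
    print(pos[1])
```

In a garment setting, the particles would be mesh vertices and the constraints would follow the mesh edges, with the tracked body acting as a moving collider. Working directly on positions rather than forces is what makes this family of methods attractive under a tight real-time budget.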