description:

This project, Phases (AR), was a collaboration between Université Paris-Saclay, Las Vegas-based artist Brett Bolton, Notch, and Canon Japan.

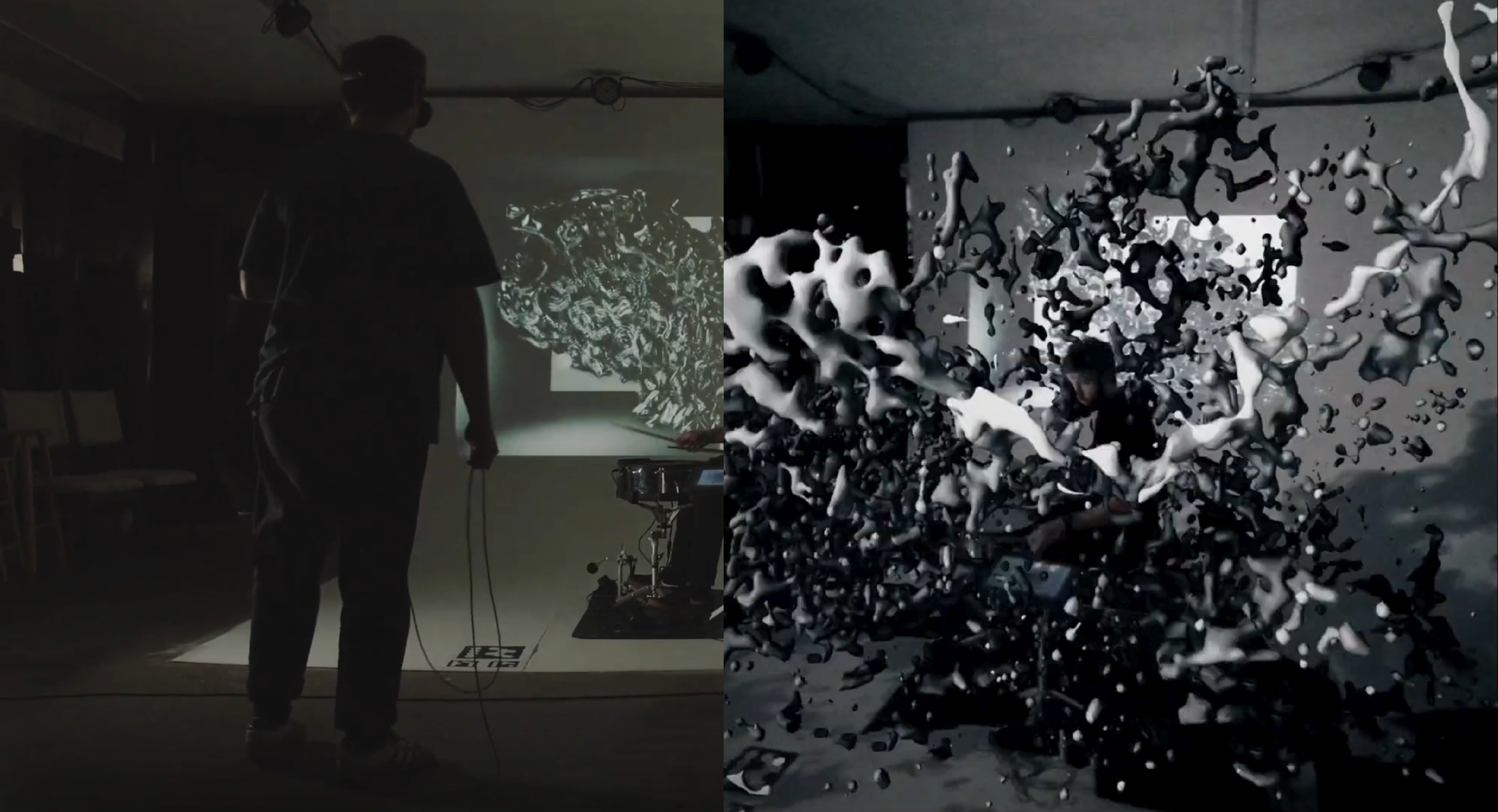

We integrated AR visuals into Brett’s existing Phases performance. During the live show, Brett played electronic drums and MIDI controllers while two audience members on stage wore Canon MREAL X1 AR glasses and saw the performance enhanced with additional AR graphics. To realistically simulate occlusions between the real and virtual worlds, we used an Azure Kinect depth sensor and aligned it with the coordinate system of the head-mounted display (HMD). This let the live performer’s silhouette occlude AR elements positioned behind him.
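As a rough illustration of the occlusion idea, and not the pipeline used in the show, the sketch below moves a depth-sensor point into the headset's frame with a 4x4 rigid transform and then hides AR pixels wherever the real surface is closer than the virtual one. The names `transform_point`, `occlusion_mask`, `kinect_to_hmd`, and the `tolerance` value are hypothetical, and it assumes both depth maps are already expressed in metres from the same viewpoint, with 0 marking an invalid depth reading.

```python
from typing import List, Sequence

Matrix4 = Sequence[Sequence[float]]  # row-major 4x4 rigid transform
Point3 = Sequence[float]


def transform_point(kinect_to_hmd: Matrix4, p: Point3) -> List[float]:
    """Map a 3D point from the depth sensor's frame into the headset's frame."""
    x, y, z = p
    # Only the first three rows matter for a rigid transform.
    return [
        row[0] * x + row[1] * y + row[2] * z + row[3]
        for row in kinect_to_hmd[:3]
    ]


def occlusion_mask(
    real_depth: Sequence[Sequence[float]],
    virtual_depth: Sequence[Sequence[float]],
    tolerance: float = 0.02,  # hypothetical margin in metres to reduce flicker
) -> List[List[bool]]:
    """Return True where the real surface is in front of the virtual one."""
    mask = []
    for real_row, virt_row in zip(real_depth, virtual_depth):
        mask.append([
            # Assumption: a depth of 0 means "no reading", so never occlude there.
            r > 0.0 and r + tolerance < v
            for r, v in zip(real_row, virt_row)
        ])
    return mask
```

For example, with a performer 1 m away and a virtual object 2 m away, the corresponding pixel is masked, so the AR element appears behind him; pixels with no depth reading are left untouched.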

At its core, our work asks whether AR can restore human presence at live events, fostering shared experiences instead of leaving us staring into our phones.

contributions:

My role bridged the technical and creative sides of the project. I developed the Kinect–MREAL calibration system and built custom tools, such as a recorder that captured 3D spatial performance data of Brett as seen through the AR glasses. This allowed the team to play back and simulate Brett’s performance without meeting him in person. I facilitated communication between team members working in different locations. I also led the online rehearsal in front of the Real-Time Live! committee to demonstrate our setup before the final show. Finally, I was responsible for running the AR part of the show on the RTL! stage.
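To give a sense of what a record-and-playback tool of this kind can look like, here is a minimal sketch under my own assumptions; it is not the recorder built for the project. The class `PerformanceRecorder`, its methods, and the JSON layout are all hypothetical. It stores timestamped lists of 3D points and, on playback, returns the most recent frame at or before a requested time.

```python
import bisect
import json
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


class PerformanceRecorder:
    """Hypothetical recorder: timestamped frames of 3D points with lookup by time."""

    def __init__(self) -> None:
        self.timestamps: List[float] = []
        self.frames: List[List[Point3]] = []

    def record(self, t: float, points: Sequence[Sequence[float]]) -> None:
        # Assumption: frames arrive in increasing time order.
        self.timestamps.append(t)
        self.frames.append([tuple(p) for p in points])

    def frame_at(self, t: float) -> List[Point3]:
        """Return the latest frame recorded at or before time t."""
        if not self.frames:
            raise ValueError("no frames recorded")
        i = bisect.bisect_right(self.timestamps, t) - 1
        return self.frames[max(i, 0)]  # clamp to the first frame for early times

    def dumps(self) -> str:
        """Serialize the recording to a JSON string."""
        return json.dumps({"timestamps": self.timestamps, "frames": self.frames})

    @classmethod
    def loads(cls, text: str) -> "PerformanceRecorder":
        """Rebuild a recording from a JSON string produced by dumps()."""
        data = json.loads(text)
        rec = cls()
        for t, pts in zip(data["timestamps"], data["frames"]):
            rec.record(t, pts)
        return rec
```

A saved recording can then be shared and replayed remotely by stepping a clock and calling `frame_at` on each tick.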